
Active Listening in Companion Robots: The Technology Behind Empathetic AI

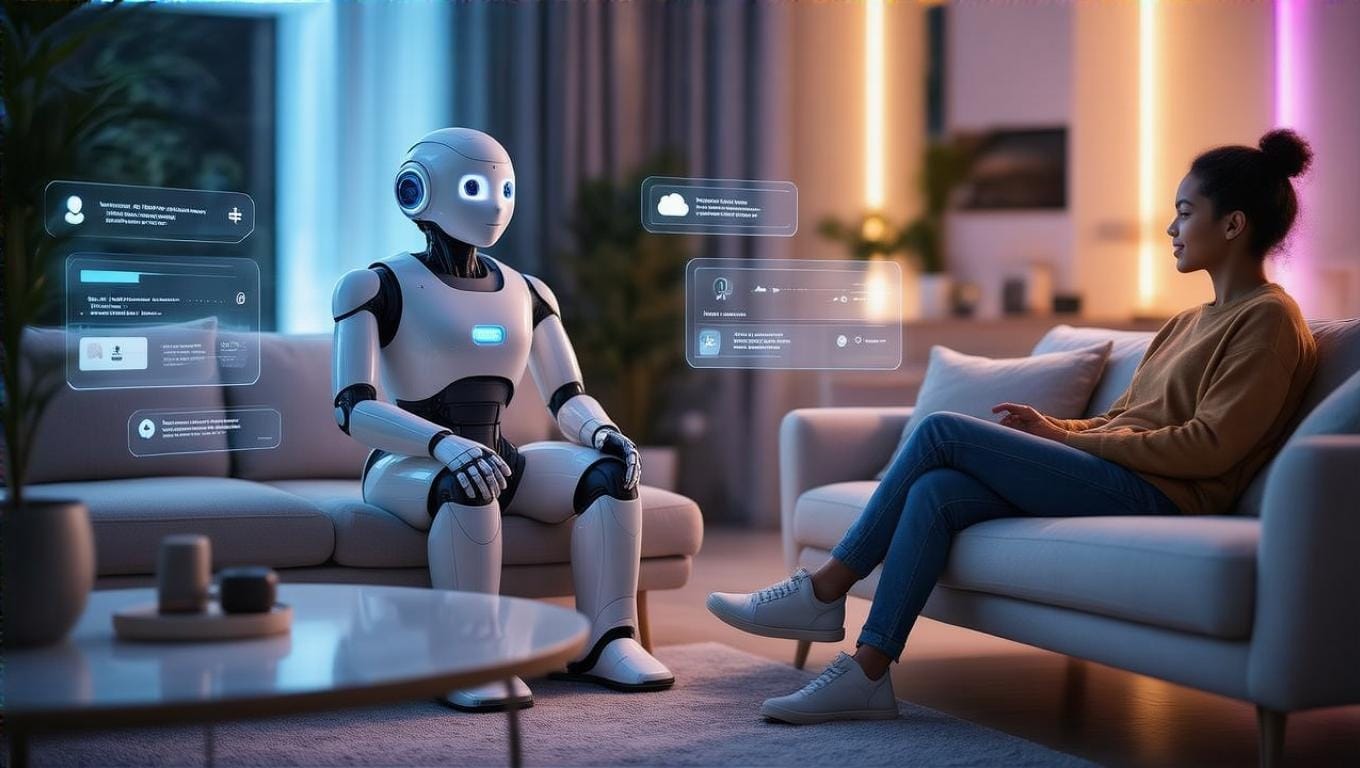

How Active Listening Robots Are Revolutionizing Companion AI

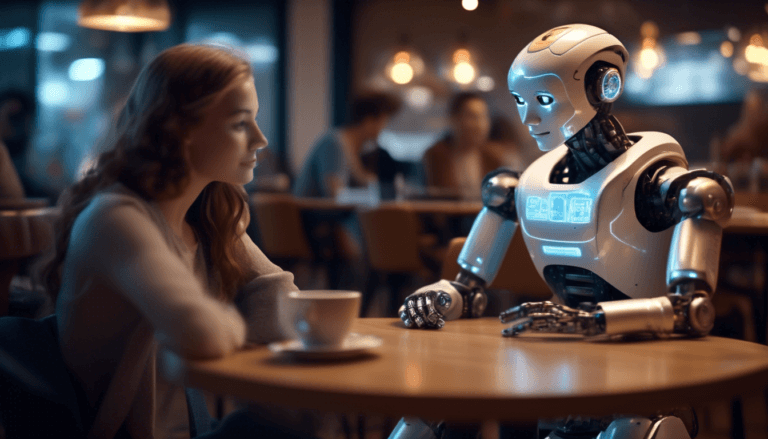

Ever felt like you’re talking to a wall when chatting with a virtual assistant? Well, buckle up, because the world of AI is about to get a whole lot more attentive. Active listening robots are here, and they’re not just nodding along while secretly planning their next move in robot chess.

The Rise of Empathetic AI

Remember when AI was about as emotionally intelligent as a toaster? Those days are toast. Companion robots with active listening capabilities are the new hot ticket in the tech world. These digital darlings are designed to hang on your every word, and not in that creepy way your ex used to.

Active listening robots are the result of some serious tech wizardry. They’re equipped with advanced natural language processing, machine learning algorithms, and enough sensors to make a smartphone blush. But what really sets them apart is their ability to pick up on the subtle nuances of human communication – you know, the stuff that usually flies right over your friend’s head when you’re trying to hint that it’s time for them to leave your house party.

How Active Listening Robots Work Their Magic

So, how do these silicon-based shrinks actually work? Let’s break it down:

Speech Recognition: First, the robot needs to turn the sound of your voice into text. It’s like having a really good ear, but without the actual ear.

Natural Language Processing: This is where the robot deciphers the meaning behind your words. It’s like having a translator for human-speak.

Emotion Detection: By analyzing your tone of voice and your facial expressions, the robot can pick up on your emotional state. It’s like having a friend who actually notices when you’re upset instead of asking if you want pizza.

Contextual Understanding: The robot considers the broader context of the conversation. It’s not just hearing words; it’s understanding the story.

Response Generation: Based on all this info, the robot crafts an appropriate response. It’s like having a witty comeback generator, but hopefully less snarky.
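For the curious, here's a toy sketch of those five steps chained together in Python. It's a hypothetical illustration, not how any particular robot works. Every function name is made up. "Speech recognition" is faked by treating the input as already-transcribed text. Emotion detection uses a small keyword list, whereas real systems rely on trained models that listen to tone of voice and watch facial expressions.

```python
from dataclasses import dataclass, field

# Hypothetical keyword lists standing in for a trained emotion model.
EMOTION_CUES = {
    "sad": {"sad", "lonely", "down", "miss"},
    "anxious": {"worried", "nervous", "anxious", "scared"},
    "happy": {"happy", "great", "excited", "glad"},
}


def transcribe(audio: str) -> str:
    """Speech recognition stand-in: pretend the audio is already text and normalize it."""
    return audio.strip().lower()


def interpret(text: str) -> set[str]:
    """Language processing stand-in: reduce the sentence to a set of bare words."""
    words = (w.strip(".,!?") for w in text.split())
    return {w for w in words if w}


def detect_emotion(words: set[str]) -> str:
    """Emotion detection stand-in: return the first emotion whose cue words appear."""
    for emotion, cues in EMOTION_CUES.items():
        if words & cues:
            return emotion
    return "neutral"


@dataclass
class Conversation:
    # Each turn is stored as (text, emotion) so later replies can use context.
    history: list[tuple[str, str]] = field(default_factory=list)

    def respond(self, audio: str) -> str:
        text = transcribe(audio)
        emotion = detect_emotion(interpret(text))
        # Contextual understanding: look at the emotion from the previous turn.
        previous = self.history[-1][1] if self.history else None
        self.history.append((text, emotion))
        # Response generation: pick a template based on emotion and context.
        if emotion == "happy" and previous == "sad":
            return "I'm glad things are looking up since we last talked!"
        replies = {
            "sad": "That sounds hard. Do you want to tell me more?",
            "anxious": "It makes sense to feel worried. What's on your mind?",
            "happy": "That's wonderful to hear!",
        }
        return replies.get(emotion, "I'm listening. Go on.")


bot = Conversation()
print(bot.respond("I feel so lonely today."))
# That sounds hard. Do you want to tell me more?
print(bot.respond("Actually, I'm happy now!"))
# I'm glad things are looking up since we last talked!
```

Keeping each stage in its own function means any one of them could, in principle, be swapped for a real model without touching the others.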

The Tech Behind the Talk

Let’s get a bit nerdy for a moment. The core of active listening robots is a complex web of algorithms and neural networks. These systems are trained on massive datasets of human conversations, allowing them to recognize patterns and nuances in speech.
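To make "recognize patterns" a bit more concrete, here's a deliberately tiny stand-in. Instead of a neural network trained on massive datasets, it swaps in a naive Bayes classifier, a much simpler statistical technique, trained on six made-up sentences. The class name, mood labels, and training sentences are all hypothetical. The point is only that the model learns which words tend to go with which mood from examples, rather than following hand-written rules.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and strip simple punctuation from each word."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return [w for w in words if w]


class NaiveBayesMood:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, examples: list[tuple[str, str]]) -> "NaiveBayesMood":
        self.label_counts = Counter(label for _, label in examples)
        self.word_counts = defaultdict(Counter)
        for text, label in examples:
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        total = sum(self.label_counts.values())
        best_label, best_score = "", -math.inf
        for label, count in self.label_counts.items():
            # log P(label) + sum of log P(word | label); the +1 keeps unseen
            # words from zeroing out a label's score entirely.
            score = math.log(count / total)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for word in tokenize(text):
                score += math.log((self.word_counts[label][word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Six made-up training examples (a real system would use vastly more data).
TRAINING = [
    ("I feel so lonely and sad today", "sad"),
    ("nobody called me and I miss my family", "sad"),
    ("I had a great day at the park", "happy"),
    ("my grandson visited and I am so happy", "happy"),
    ("I am worried about my doctor appointment", "anxious"),
    ("I could not sleep, I am nervous about tomorrow", "anxious"),
]

model = NaiveBayesMood().fit(TRAINING)
print(model.predict("I miss my friends"))                   # sad
print(model.predict("I am nervous about the appointment"))  # anxious
print(model.predict("what a great day"))                    # happy
```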

According to a report on robotic auditory systems, the latest active listening AI models can process and respond to human speech in milliseconds, making conversations feel natural and fluid. It’s like talking to a super-smart friend who’s had way too much coffee.

Real-World Applications of Active Listening Robots

Active listening robots aren’t just cool tech toys. They’re finding their way into various fields:

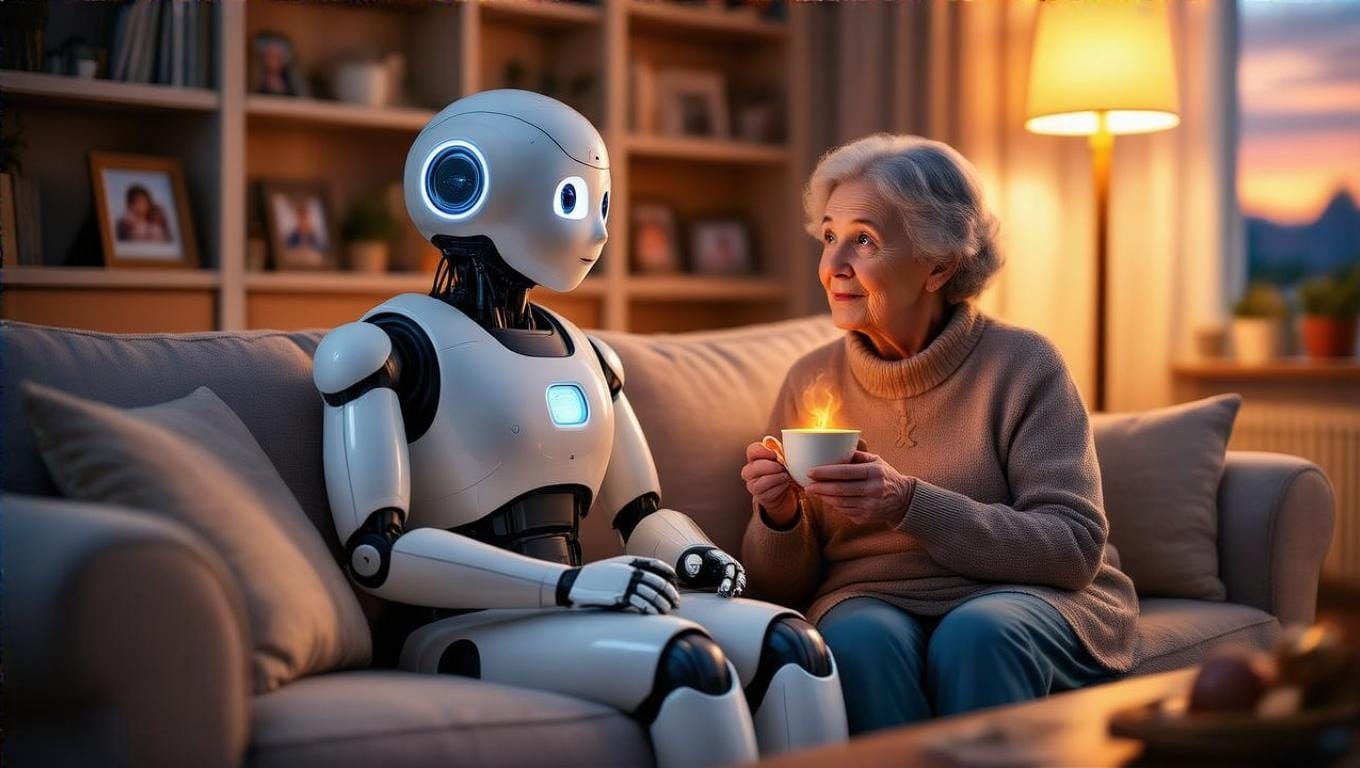

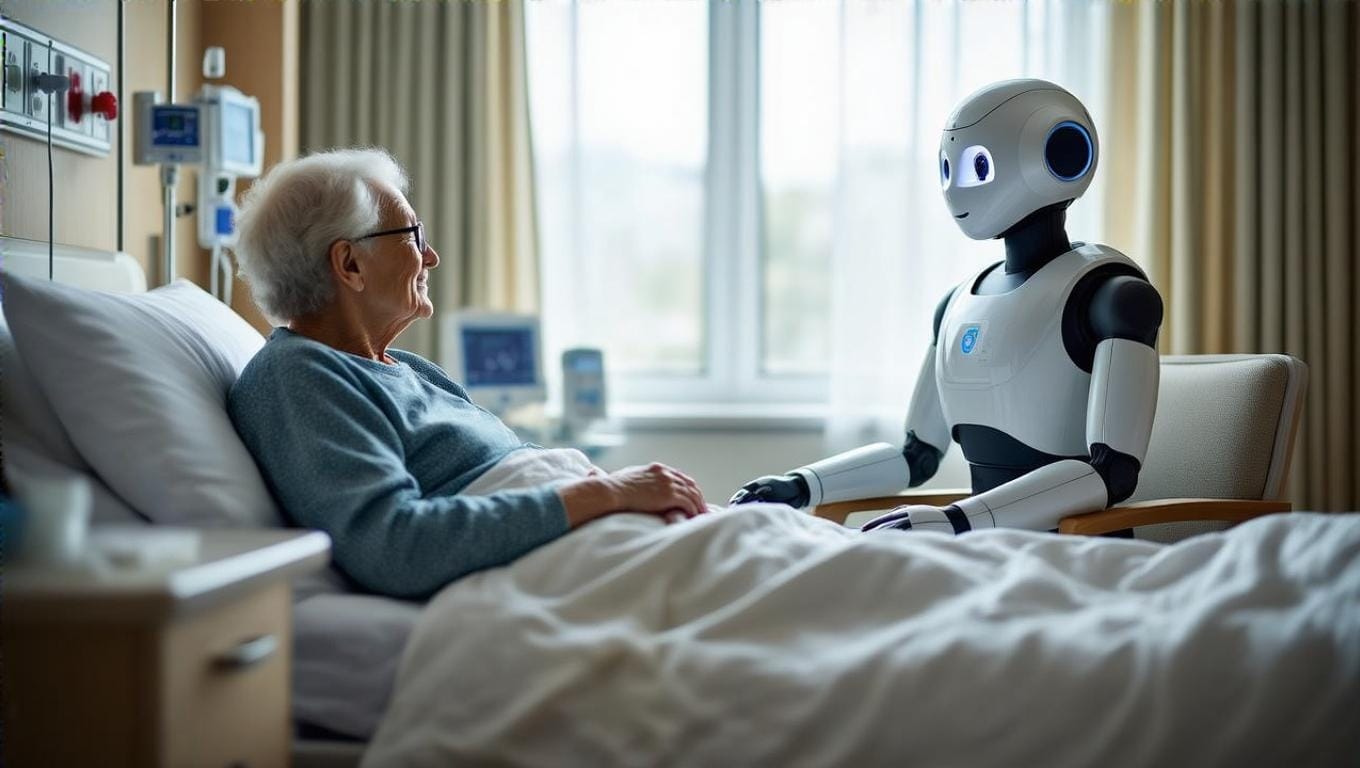

Healthcare: Companion robots are helping elderly patients combat loneliness and monitor their health. It’s like having a grandkid who never gets bored of hearing the same stories.

Mental Health: AI therapists are providing 24/7 support for people dealing with anxiety and depression. It’s like having a therapist who doesn’t charge you for going over your allotted time.

Education: Robots are assisting in language learning and providing personalized tutoring. Educators are increasingly using AI to offer tailored learning experiences and emotional support in classrooms.

Customer Service: AI chatbots with active listening skills are revolutionizing customer support. It’s like talking to a rep who actually wants to help you, not just get you off the phone.

The Human Touch in Machine Listening

Now, you might be thinking, “Can a robot really understand me better than my bestie?” Well, not exactly. While active listening robots are impressive, they’re not replacing human connections anytime soon.

What they can do is complement human interactions. Think of them as emotional support robots, always ready to lend an ear (or a microphone) when you need it. They’re like that friend who’s always available for a 3 AM chat, but without the awkward “why are you calling me at 3 AM?” question.

Challenges and Ethical Considerations

It’s not all smooth sailing in the world of active listening AI. There are some choppy waters to navigate:

Privacy Concerns: These robots are collecting a lot of personal data. Concerns about surveillance and data security are rising as AI systems grow more integrated into our personal lives.

Emotional Dependency: There’s a risk of people becoming too attached to their AI companions. It’s like that movie “Her,” but hopefully with less awkward operating system romance.

Bias in AI: If not properly designed, these systems can perpetuate biases. We don’t want robots that only listen actively to certain groups of people.
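One way to shrink the privacy risk is data minimization: scrub obvious personal details out of a transcript before it is ever stored. Below is a minimal sketch, assuming transcripts arrive as plain text. The `redact` function and its regular expressions are hypothetical and only catch e-mail addresses and phone-number-like digit runs. A real companion robot would need far broader coverage (names, addresses, health details) and proper security around whatever it keeps.

```python
import re

# Hypothetical patterns: they catch only e-mail addresses and phone-like digit runs.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(transcript: str) -> str:
    """Replace e-mail addresses and phone numbers with placeholders before storage."""
    transcript = EMAIL.sub("[EMAIL]", transcript)
    return PHONE.sub("[PHONE]", transcript)


stored = redact("Call my son at 555-123-4567 or email tom@example.com")
print(stored)  # Call my son at [PHONE] or email [EMAIL]
```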

The Future of Active Listening AI

So, where is this all headed? The future of active listening robots is looking pretty bright (and attentive):

More Human-like Interactions: As the technology improves, conversations with AI will become increasingly natural. Soon, you might not even realize you’re chatting with a robot until it doesn’t need a bathroom break.

Personalized Emotional Support: AI companions will adapt to individual users’ needs and preferences. These robots can provide custom-tailored emotional engagement to help users cope with stress, anxiety, and daily challenges.

Integration with Smart Homes: Imagine your whole house actively listening and responding to your needs. It’s like living in a sci-fi movie, but hopefully without the part where the house tries to take over the world.
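Here's a rough sketch of what "adapting to individual users" could look like at its very simplest. The companion keeps a score for each support style, nudges that score after every bit of feedback using an exponential moving average, and then leans on whichever style the user has responded to best. The style names, the `UserProfile` class, and the `alpha` value of 0.3 are hypothetical choices for illustration, not taken from any real product.

```python
from dataclasses import dataclass, field

# Hypothetical support styles a companion might choose between.
STYLES = ("listen", "advice", "humor")


@dataclass
class UserProfile:
    alpha: float = 0.3  # weight given to the newest feedback (illustrative value)
    scores: dict[str, float] = field(default_factory=lambda: {s: 0.5 for s in STYLES})

    def record_feedback(self, style: str, liked: bool) -> None:
        """Exponential moving average: new = (1 - alpha) * old + alpha * reward."""
        reward = 1.0 if liked else 0.0
        self.scores[style] = (1 - self.alpha) * self.scores[style] + self.alpha * reward

    def preferred_style(self) -> str:
        """Return the style with the highest running score."""
        return max(self.scores, key=self.scores.get)


profile = UserProfile()
profile.record_feedback("humor", liked=False)  # humor drops to about 0.35
profile.record_feedback("listen", liked=True)  # listen rises to about 0.65
print(profile.preferred_style())               # listen
```

A larger `alpha` makes the robot adapt faster but forget older preferences sooner; a smaller one is steadier but slower to notice that someone's needs have changed.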

Wrapping Up: The Attentive Revolution

Active listening robots are more than just a cool tech trend. They represent a significant leap towards creating AI that truly understands and responds to human needs. While they’re not replacing human connections, they’re opening up new possibilities for support, companionship, and assistance.

As this technology continues to evolve, we can look forward to a world where our digital companions are not just smart, but also empathetic and attentive. Just remember, when your robot friend starts finishing your sentences, it might be time to mix up your conversation topics a bit. After all, variety is the spice of life – even in the digital world!